Measures / Countermeasures

A couple of days ago it emerged that a company called Renew are trialling tracking smartphones using a device called the Presence Orb ("A cookie for the real world"), placed in recycling bins across the City of London.

The mechanism by which they do this hasn't been discussed in much detail in the media, so I thought I'd have a go at unpacking it.

Every device that connects to a wired or wireless network has a Media Access Control (MAC) address that identifies it. It's meant to be unique worldwide, and is written into read-only memory on the network chip.

The MAC address is a 48-bit number, usually written as six pairs of hexadecimal digits, such as 01:23:45:67:89:ab. The first three pairs are a manufacturer-specific prefix, so Apple has 00:03:93, Google has 00:1A:11 and so on. The list is freely available, and many manufacturers have multiple prefixes.
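To make that concrete, here's a small POSIX shell sketch (the function names are illustrative, not from any tool) that splits out the manufacturer prefix and reads the two flag bits the first byte also carries: the lowest bit marks a multicast address, and the next one marks a locally administered address, i.e. one set in software rather than assigned by the manufacturer.

```sh
#!/bin/sh
# The first three pairs: the manufacturer prefix, uppercased for lookup.
mac_oui() {
  echo "$1" | cut -d: -f1-3 | tr 'a-f' 'A-F'
}

# Bit 1 of the first byte: set = locally administered (set in software),
# clear = globally unique, assigned by the manufacturer.
mac_is_locally_administered() {
  local first
  first=$(( 0x${1%%:*} ))
  [ $(( first & 2 )) -ne 0 ]
}

# Bit 0 of the first byte: set = multicast/group address, clear = unicast.
mac_is_multicast() {
  local first
  first=$(( 0x${1%%:*} ))
  [ $(( first & 1 )) -ne 0 ]
}
```

For example, mac_oui 00:03:93:12:34:56 prints 00:03:93. As it happens, the example address above, 01:23:45:67:89:ab, has its multicast bit set, so a real network card wouldn't use it as its own address.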

MAC addresses are only used for communicating between devices on the same local network, and they aren't visible outside it (on the wider internet, for example).

Your smartphone, knowing that you prefer to be on a fast wireless network rather than 3G, will regularly ask nearby wireless networks to announce themselves, and if it finds one you've saved previously, it'll connect to it automatically.

This is called a probe request, and like every packet your device sends, it contains your MAC address, even if you never connect to a network. Probe requests are sent every few seconds if you're actively using the phone, or every minute or so if it's on standby with the display off, though the frequency varies between hardware and operating systems.

By recording the MAC address and comparing its signal strength across a number of different receivers, you can estimate the device's position. That estimate will be reasonably accurate along a 1D line like a street, and less so in a more complex 2D space.
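A minimal sketch of the idea, assuming the common log-distance path loss model, where signal strength drops by 10n dB for every tenfold increase in distance. The reference strength at 1 m (-40 dBm) and the exponent (n = 2, roughly free space) are placeholder assumptions; in practice they'd need calibrating for each site, and reflections make individual readings noisy. With two receivers on a straight street, each distance estimate implies a position, and the sketch simply averages the two.

```sh
#!/bin/sh
# Log-distance path loss model (an assumption, not Renew's method):
#   rssi = ref_rssi - 10 * n * log10(distance_m)
# ref_rssi: received strength at 1 m in dBm (placeholder: -40)
# n: path loss exponent (placeholder: 2, roughly free space)

# Invert the model to turn one RSSI reading into metres.
rssi_to_metres() {
  local rssi="$1" ref_rssi="${2:--40}" n="${3:-2}"
  awk -v r="$rssi" -v ref="$ref_rssi" -v n="$n" \
    'BEGIN { printf "%.1f\n", 10 ^ ((ref - r) / (10 * n)) }'
}

# Receiver A sits at 0 m and receiver B at spacing_m along the street.
# A's reading puts the phone da metres from A; B's puts it
# (spacing_m - db) metres from A. Average the two positions.
street_position() {
  local da db
  da=$(rssi_to_metres "$1")
  db=$(rssi_to_metres "$2")
  awk -v da="$da" -v db="$db" -v s="$3" \
    'BEGIN { printf "%.1f\n", (da + (s - db)) / 2 }'
}
```

For example, street_position -60 -70 40 (receivers 40 m apart) prints 9.2, i.e. about 9 m from the first receiver.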

Renew claim that during a single day in the trial period they detected 106,629 unique devices across 946,016 data points from 12 receivers. Given that the entire working population of the City of London is ~350,000, that seems high, but I guess they're not mentioning that they pick up everyone on a passing bus, taxi or lorry too.
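As a back-of-the-envelope check, Renew's figures work out at 946,016 / 106,629, or roughly 8.87 data points per unique device across the 12 receivers. The sketch below shows how that kind of summary could be computed from raw sightings; the CSV layout (timestamp, receiver, MAC, signal strength) is a hypothetical format for illustration, not Renew's.

```sh
#!/bin/sh
# Hypothetical input: one sighting per line, as
#   timestamp,receiver_id,mac,rssi
summarise_sightings() {
  local file="$1" points devices
  # Every line is one data point
  points=$(wc -l < "$file")
  # Unique devices = distinct values in the MAC column
  devices=$(cut -d, -f3 "$file" | sort -u | wc -l)
  awk -v p="$points" -v d="$devices" 'BEGIN {
    printf "%d points, %d devices, %.2f points per device\n", p, d, (d ? p / d : 0)
  }'
}
```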

They make sure to mention that the MAC address doesn't reveal a name, address or any other data about an individual. But because your MAC address never changes, as coverage grows you could be tracked from your bus stop, to your tube station, out the other side, and on to your office. That trail could then be correlated with store card transactions by timestamp, tying it to a personal identity. Or perhaps you'd like to sign into a hotspot using Facebook? And so on.

Of course, you can opt-out.

But here's the thing. Even though the MAC address is baked into the hardware, you can change it.

On OS X, you'd do something like this to generate a random MAC address:

openssl rand -hex 6 | sed 's/\(..\)/\1:/g; s/.$//'

And then to change it:

sudo ifconfig en0 ether 00:11:22:33:44:55

Replace 00:11:22:33:44:55 with the address you just generated. On most devices it'll reset back to the default when the machine is restarted.
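One wrinkle with a fully random address: the first byte might come out with its multicast bit set, which isn't a valid address for a network card and may be rejected. A slightly safer sketch (POSIX shell, reading /dev/urandom through od rather than openssl) clears that bit and sets the locally administered bit, so the result is a valid unicast address that won't clash with a manufacturer-assigned one.

```sh
#!/bin/sh
random_mac() {
  # Six random bytes as lowercase hex pairs, e.g. "3f a1 07 ..."
  set -- $(od -An -N6 -tx1 /dev/urandom)
  # First byte: set bit 1 (locally administered), clear bit 0 (unicast)
  printf '%02x:%s:%s:%s:%s:%s\n' $(( (0x$1 | 2) & 0xFE )) "$2" "$3" "$4" "$5" "$6"
}
```

You'd then pass it straight to ifconfig, e.g. sudo ifconfig en0 ether "$(random_mac)".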

(This technique will also let you keep using hotspots with a 15 minute free period. Just rotate your MAC and connect again.)

To all intents and purposes, you are now a different device on the network. And if you didn't care about using your wireless connection for anything useful, you could run a script to rotate it every few seconds, broadcasting probe requests to spoof any number of devices, from whichever manufacturer(s) you wish to be.
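A sketch of that rotation loop, posing as one manufacturer by keeping its prefix (Apple's 00:03:93, say) and randomising the rest. The helper names are hypothetical, and it's deliberately cautious: with DRY_RUN=1, the default, it only prints the OS X ifconfig commands, since applying them needs root and will drop your current connection.

```sh
#!/bin/sh
# Hypothetical helper: a MAC address with a chosen manufacturer prefix
# and three random bytes after it.
mac_with_oui() {
  local oui="$1"
  set -- $(od -An -N3 -tx1 /dev/urandom)
  printf '%s:%s:%s:%s\n' "$oui" "$1" "$2" "$3"
}

# Switch iface to a new address count times, interval seconds apart.
# DRY_RUN=1 (the default) only prints the commands.
rotate_mac() {
  local iface="$1" oui="$2" interval="${3:-5}" count="${4:-1}" i=0 mac
  while [ "$i" -lt "$count" ]; do
    mac=$(mac_with_oui "$oui")
    if [ "${DRY_RUN:-1}" = 1 ]; then
      echo "ifconfig $iface ether $mac"
    else
      sudo ifconfig "$iface" ether "$mac"
    fi
    i=$((i + 1))
    # No need to wait after the last change
    if [ "$i" -lt "$count" ]; then sleep "$interval"; fi
  done
}
```

DRY_RUN=0 rotate_mac en0 00:03:93 5 100 would then switch address every five seconds, a hundred times.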

The same packet that contains your MAC address also contains a sequence number, an incrementing 12-bit counter that wraps around, used to check the ordering of messages. The Presence Orb could use it to spot a run of messages whose sequence numbers follow on from each other, even though their MAC addresses differ, and discard them as coming from a single device. In turn, you could randomise that too.
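For the curious, here's that arithmetic as a shell sketch. In 802.11 frames the sequence number lives in the top 12 bits of a 16-bit Sequence Control field (the bottom 4 bits are a fragment number), so it wraps from 4095 back to 0. The seq_follows check is a hypothetical example of the kind of heuristic a receiver might use, with some slack for frames it missed; it isn't anything Renew have described.

```sh
#!/bin/sh
# Extract the 12-bit sequence number from a 16-bit Sequence Control value.
seq_from_control() {
  echo $(( ($1 >> 4) & 0xFFF ))
}

# Succeeds if cur plausibly follows prev from the same radio, allowing
# for wrap-around at 4096 and up to max_gap missed frames.
seq_follows() {
  local prev="$1" cur="$2" max_gap="${3:-16}" gap
  gap=$(( (cur - prev + 4096) % 4096 ))
  [ "$gap" -ge 1 ] && [ "$gap" -le "$max_gap" ]
}
```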

They might check signal strength, discarding multiple messages with similar strength. In turn, you'd randomise your transmit power, appearing to be at a multitude of distances from the receiver.
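On Linux, the iw tool can set a fixed transmit power per interface, in mBm (hundredths of a dBm). This sketch picks a random level within a range; the 1 to 15 dBm default is an assumption, plenty of drivers ignore or clamp the setting, so check what yours actually does, and stay within legal limits. It only prints the command unless DRY_RUN=0.

```sh
#!/bin/sh
random_txpower_cmd() {
  local iface="$1" min_dbm="${2:-1}" max_dbm="${3:-15}" r dbm
  # A random 16-bit number from /dev/urandom (POSIX sh has no $RANDOM)
  r=$(od -An -N2 -tu2 /dev/urandom | tr -d ' ')
  dbm=$(( min_dbm + r % (max_dbm - min_dbm + 1) ))
  # iw takes the power in mBm, i.e. hundredths of a dBm
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "iw dev $iface set txpower fixed $(( dbm * 100 ))"
  else
    sudo iw dev "$iface" set txpower fixed $(( dbm * 100 ))
  fi
}
```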

Then there are beam-forming antennas, scattering off buildings and so on, to ruin attempts to trilaterate your signal. Stick all this on a Raspberry Pi, put it in a box, plug it into a car battery, tuck it under an alcove, and walk away.

If you ensure your signal strength is kept within bounds, and traffic low enough not to disrupt other genuine users of nearby wireless networks, I believe this is legal, and it'd effectively ruin Renew's aggregate data, making traffic analysis impossible.

It's still unclear whether what Renew is doing is legal. I am not a lawyer, but I suspect we'd need a clarification from the ICO as to whether the combination of MAC address and location is personal information and regulated by the Data Protection Act, as is suggested by the EU's Article 29 Working Party.

It seems likely that the law will be a step behind location tracking technology, for a while at least. And while that's the case, chaff is going to be an important part of maintaining privacy. The tools are there to provide it, if we want to.

Project Looking Glass

Introduction

Newspaper Club has two offices: one in Glasgow and one in London. Glasgow is the HQ, where all the customer service, logistics and operational stuff happens. And in London, we develop and manage all the products and services, designing the site, writing code and so on.

We chat all day long between us in a couple of Campfire rooms, and we're not at the size where that's a bottleneck or difficult to manage. But it's nice to have a more ambient awareness of each other's comings and goings, especially on the days when we're all heads down in our own work, and there isn't as much opportunity to paste funny videos in Campfire.

I wanted to make something to aid that: a two-way, office-to-office video screen. I wanted it to be always on, with no dialling up required, and for it to recover automatically from network outages. I wanted the display to be big, but not intrusive. I didn't want a video conference. I didn't want people to be able to log in from home, or look back through recorded footage. I wanted to be able to wave at the folks in the other office every morning and evening, and for that to feel normal.

Here's what we came up with:

There's a Raspberry Pi at each end, each connected to a webcam and a monitor. You should be able to put a pair together for under £150, if you can find a spare monitor or two. There's no sound, and the video is designed to look reasonable while being tolerant of a typical office's bandwidth constraints.

Below, I'll explain how you can make one yourself.

There's obvious precedent here, the most recent being BERG's Connbox project (the writeup is fantastic: read it!), but despite sharing studio space with them, we'd never actually talked about the project explicitly, so it's likely I just absorbed the powerful psychic emanations from Andy and Nick. Or the casual references to GStreamer in the kitchen.

Building this has been a slow project for me, tucked into odd evenings and weekends over the last year. It's been through a few different iterations of hardware and software, trying to balance the price and availability of the parts, complexity of the setup, and robustness in operation.

I really wanted it to be cheap, because it felt like it should be. I knew I could make it work with a high-spec ARM board or an x86 desktop machine (that turned out to be easy), but I also knew all the hardware and software inside a £50 Android phone should be able to manage it, and that felt more like the scale of the thing I wanted to build. Just to make a point, I guess.

I got stuck on this for a while, until H264 encoding became available on the Raspberry Pi's GPU. Now we have a £25 board that can do hardware-accelerated simultaneous H264 encoding/decoding, with Ethernet, HDMI and audio out, on a modern Linux distribution. Add a display and a webcam, and you're set.

The strength of the Raspberry Pi's community can't be overstated. When I first used it, it ran an old Linux kernel (3.1.x), missing newer features and security fixes. The USB driver was awful, and it would regularly drop 30% of the packets under load, or just lock up when you plugged the wrong keyboard in.

Now, there's a modern Linux 3.6 kernel, the USB driver seems to be more robust, and most binaries in Raspbian are optimised for the CPU architecture. So, thank you to everyone who helped make that happen.

Building One Yourself

The high level view is this: we're using GStreamer to take a raw video stream from a camera, encode it into H264 format, bung that into RTP packets over UDP, and send those at another machine, where another instance of GStreamer receives them, unpacks the RTP packets, reconstructs the H264 stream, decodes it and displays it on the screen.
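As a rough sketch of that chain, these are the two GStreamer 1.0 pipeline descriptions for a Raspberry Pi, using the OMX hardware H264 elements. They're illustrative rather than a copy of the looking-glass scripts: the resolution, frame rate, RTP payload type and sink options are assumptions. HOST and PORT fall back to the loopback defaults the scripts use.

```sh
#!/bin/sh
# Camera -> raw video -> hardware H264 -> RTP -> UDP to the other office.
sender_pipeline() {
  echo "v4l2src ! video/x-raw,width=640,height=480,framerate=15/1" \
    "! videoconvert ! omxh264enc ! h264parse" \
    "! rtph264pay config-interval=1 pt=96" \
    "! udpsink host=${HOST:-127.0.0.1} port=${PORT:-5000}"
}

# UDP -> RTP -> H264 -> hardware decode -> screen. udpsrc needs the caps
# spelled out, since nothing in the raw UDP stream describes them.
receiver_pipeline() {
  echo "udpsrc port=${PORT:-5000}" \
    "caps=\"application/x-rtp,media=video,clock-rate=90000,encoding-name=H264,payload=96\"" \
    "! rtph264depay ! h264parse ! omxh264dec ! eglglessink sync=false"
}
```

gst-launch-1.0 joins its arguments into one pipeline description, so each should work passed as a single argument: gst-launch-1.0 "$(receiver_pipeline)" on one Pi, and HOST=192.0.2.10; gst-launch-1.0 "$(sender_pipeline)" on the other (with that Pi's real address).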

Install Raspbian, and using raspi-config, set the GPU to 128MB RAM (I haven't actually tried it with 64MB, so YMMV). Do a system upgrade with sudo apt-get update && sudo apt-get dist-upgrade, and then upgrade your board to the latest firmware using rpi-update, like so:

sudo apt-get install git-core

sudo wget http://goo.gl/1BOfJ -O /usr/bin/rpi-update && sudo chmod +x /usr/bin/rpi-update

sudo rpi-update

Reboot, and you're now running the latest Linux kernel, and all your packages are up to date.

GStreamer in Raspbian wheezy is at version 0.10, and doesn't support the OMX H264 encoder/decoder pipeline elements. Thankfully, Defiant on the Raspberry Pi forums has built and packaged up GStreamer 1.0, including all the OMX libraries, so you can just apt-get the lot and have it up and running in a few seconds.

Add the following line to /etc/apt/sources.list:

deb http://vontaene.de/raspbian-updates/ . main

And then install the packages:

sudo apt-get update

sudo apt-get install gstreamer1.0-omx gstreamer1.0-plugins-bad gstreamer1.0-plugins-base gstreamer1.0-plugins-good gstreamer1.0-plugins-ugly gstreamer1.0-tools gstreamer1.0-x

We also need the video4linux tools to interface with the camera:

sudo apt-get install v4l-utils v4l-conf

To do the actual streaming I've written a couple of small scripts to encapsulate the two GStreamer pipelines, available in tomtaylor/looking-glass on GitHub.

By default they're set up to stream to 127.0.0.1 on port 5000, so if you run them both at the same time, from different consoles, you should see your video pop up on the screen. It doesn't look very impressive, but you're actually running through the same pipeline that works across the local network or internet, so you're most of the way there.

The scripts can be configured with environment variables, which should be evident from the source code. For example, HOST="foo.example.com" ./video-server.sh will stream your webcam to foo.example.com on the default port of 5000.

To launch the scripts at boot time we've been using daemontools. This makes it easy to just reboot the Pi if something goes awry. I'll leave the set up of that as an exercise for the reader.
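For anyone who wants a head start on that exercise, here's one possible sketch of a daemontools service. It assumes the Debian/Raspbian daemontools-run package, whose svscan watches /etc/service; the /etc/looking-glass build location and the script path are hypothetical. supervise runs the service's run file and restarts it whenever it exits, which is what gives you the automatic recovery. The service directory is built elsewhere and symlinked in last, so svscan never picks up a half-finished one.

```sh
#!/bin/sh
install_service() {
  local name="$1" script="$2"
  local src_root="${SRC_ROOT:-/etc/looking-glass}"
  local svc_root="${SERVICE_ROOT:-/etc/service}"
  local dir="$src_root/$name"

  mkdir -p "$dir"
  # run: send stderr to the same log as stdout, then exec the script so
  # supervise watches the streaming process itself, not a wrapper shell.
  cat > "$dir/run" <<EOF
#!/bin/sh
exec 2>&1
exec $script
EOF
  chmod +x "$dir/run"

  # Link into the scanned directory last, once run is in place.
  ln -sfn "$dir" "$svc_root/$name"
}
```

Run as root, install_service looking-glass-server /home/pi/looking-glass/video-server.sh would set up the sending side.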

You don't have to use a Raspberry Pi at both ends. You could use an x86 Linux machine, with GStreamer and the various codecs installed. The scripts support overriding the encoder, decoder and video sink pipeline elements to use other elements supported on your system.

Most x86 machines have no hardware H264 encoder support, but we can use x264enc to do software encoding, as long as you don't mind giving over a decent portion of a CPU core. We found this works well, but it needed some tuning to reduce the latency. Something like x264enc bitrate=192 sync-lookahead=0 rc-lookahead=10 threads=4 option-string="force-cfr=true" seemed to perform well without too much lag. For decoding we're using avdec_h264. In Ubuntu 12.04 I had trouble getting eglglessink to work, so I've swapped it for xvimagesink. You shouldn't need to change any of this if you're using a Pi at both ends, though: the scripts default to the correct elements.
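To try those x264enc settings without a camera or a second machine, a sketch like this encodes a live test pattern and immediately decodes it again, which gives a rough feel for the encoder's latency and CPU use. The test source and caps are assumptions for illustration; the encoder options are the ones quoted above.

```sh
#!/bin/sh
# Encoder settings as quoted in the text
X264_OPTS='bitrate=192 sync-lookahead=0 rc-lookahead=10 threads=4 option-string="force-cfr=true"'

# Live test pattern -> software H264 encode -> decode -> X11 window.
x264_loopback_pipeline() {
  echo "videotestsrc is-live=true ! video/x-raw,width=640,height=480,framerate=15/1" \
    "! videoconvert ! x264enc $X264_OPTS ! h264parse" \
    "! avdec_h264 ! videoconvert ! xvimagesink"
}
```

Run it with gst-launch-1.0 "$(x264_loopback_pipeline)" and wave at the test pattern's clock, so to speak.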

Camera-wise, in Glasgow we're using a Logitech C920, which is a good little camera with a great image, if a touch expensive. In London it's a slightly tighter space, so we're using a Genius WideCam 1050, which is almost fisheye in angle. We all look a bit skate video, but it seemed more important to get everyone in the shot.

You'll probably also need to put these behind a powered USB hub, otherwise you'll find intermittent lockups as the Raspberry Pi can't provide enough power to the camera. It's not the cheapest, but the Pluggable 7-port USB hub worked well for us.

The End

It works! The image is clear and relatively smooth - comparable to Skype, I'd say. It can be overly affected by internet weather, occasionally dropping to a smeary grey mess for a few seconds, so it definitely needs a bit of tuning to dial in the correct bitrate and keyframe interval for lossy network conditions. It always recovers, but can be a bit annoying while it works itself out.
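To put the bitrate in context, a stream that's always on adds up. At the 192 kbit/s used for x264enc earlier, each direction works out at about 2 GB a day before protocol overhead. A trivial sketch of the sum:

```sh
#!/bin/sh
# Approximate data per direction for an always-on stream, in megabytes
# (10^6 bytes) per day. $1 is in kbit/s, the unit of x264enc's bitrate
# property. RTP/UDP/IP headers are ignored, so treat it as a floor.
daily_megabytes() {
  echo $(( $1 * 1000 * 86400 / 8 / 1000000 ))
}
```

daily_megabytes 192 prints 2073, so each office's connection carries roughly 2 GB up and 2 GB down every day.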

And it's fun! We hung up a big "HELLO GLASGOW" scrawled on A3 paper from the ceiling of our office, and had a good wave at each other. That might get boring, and it might end up being a bit weird, and if so, we'll turn it off. But it might be a nice way to connect the two offices without any of the pressures of other types of synchronous communication. We'll see how it goes.

If you make one, I'd love to hear about it, especially if you improve on any of the scripts or configuration.

Roll Five

Print Production with Quartz and Cocoa

I wrote a post on the Newspaper Club blog the other day about ARTHR & ERNIE, our systems for making a newspaper in your browser.

One of the things I touched on was Quartz, part of Cocoa's Core Graphics stack, and how we use it to generate fast previews and high-quality PDFs on the fly, as a user designs their paper.

If you need to do something similar, even if it's not in real time, Quartz is a great option. Unlike the PDF-specific generation libraries, such as Prawn, it's fast and flexible, with a high-quality typography engine (Core Text). And unlike the lower-level rasterisation libraries, like Cairo and Skia, it supports complex colour management, including CMYK. The major downside is that you need to run it on Mac OS X, for which hosting is less available and slightly arcane.

It took a lot of fiddling to understand exactly how to best use all the various APIs, so I thought it might be useful for someone if I just wrote down a bit of what I learnt along the way.

I'm going to assume you know something about Cocoa and Objective-C. All these examples run on the Mac; apart from the AppKit classes (NSView, NSImage and friends) and the higher-level Cocoa text layout system, the same Core Graphics APIs should be available on iOS too. They assume ARC support.

Generating Preview Images

Let's say we have an NSView hierarchy, containing things like an NSImageView or an NSTextView.

Generating an NSImage is pretty easy - you render the NSView into an NSGraphicsContext backed by an NSBitmapImageRep, like so:

- (NSImage *)imageForView:(NSView *)view width:(float)width {
    float scale = width / view.bounds.size.width;
    float height = round(scale * view.bounds.size.height);

    NSString *colorSpace = NSCalibratedRGBColorSpace;

    // An offscreen 8-bit-per-channel RGBA bitmap to render into
    NSBitmapImageRep *bitmapRep;
    bitmapRep = [[NSBitmapImageRep alloc] initWithBitmapDataPlanes:NULL
                                                        pixelsWide:width
                                                        pixelsHigh:height
                                                     bitsPerSample:8
                                                   samplesPerPixel:4
                                                          hasAlpha:YES
                                                          isPlanar:NO
                                                    colorSpaceName:colorSpace
                                                      bitmapFormat:0
                                                       bytesPerRow:(4 * width)
                                                      bitsPerPixel:32];

    NSGraphicsContext *graphicsContext;
    graphicsContext = [NSGraphicsContext graphicsContextWithBitmapImageRep:bitmapRep];
    [graphicsContext setImageInterpolation:NSImageInterpolationHigh];

    // Scale the view's coordinate space down to the requested pixel width
    CGContextScaleCTM((CGContextRef)graphicsContext.graphicsPort, scale, scale);

    [view displayRectIgnoringOpacity:view.bounds inContext:graphicsContext];

    NSImage *image = [[NSImage alloc] initWithSize:bitmapRep.size];
    [image addRepresentation:bitmapRep];

    return image;
}

You can then convert this to a JPEG or similar for previewing.

NSBitmapImageRep *imageRep = [[image representations] objectAtIndex:0];
NSData *bitmapData = [imageRep representationUsingType:NSJPEGFileType properties:nil];

Generating PDFs

Generating a PDF is easy too, given an NSArray of views in page order.

- (NSData *)pdfDataForViews:(NSArray *)viewsArray {
    NSMutableData *data = [NSMutableData data];

    CGDataConsumerRef consumer;
    consumer = CGDataConsumerCreateWithCFData((__bridge CFMutableDataRef)data);

    // Assume the first view is the same size as the rest of them
    CGRect mediaBox = [[viewsArray objectAtIndex:0] bounds];

    CGContextRef ctx = CGPDFContextCreate(consumer, &mediaBox, NULL);
    CFRelease(consumer); // the PDF context keeps its own reference

    NSGraphicsContext *gc = [NSGraphicsContext graphicsContextWithGraphicsPort:ctx flipped:NO];

    // One PDF page per view
    [viewsArray enumerateObjectsUsingBlock:^(NSView *pageView, NSUInteger idx, BOOL *stop) {
        CGContextBeginPage(ctx, &mediaBox);
        CGContextSaveGState(ctx);
        [pageView displayRectIgnoringOpacity:mediaBox inContext:gc];
        CGContextRestoreGState(ctx);
        CGContextEndPage(ctx);
    }];

    CGPDFContextClose(ctx);
    CGContextRelease(ctx);

    return data;
}

Quartz maps very closely to the PDF format, making the PDF rendering effectively a linear transformation from Quartz's underpinnings. But Apple's interpretation of the PDF spec is odd in ways I don't quite understand, and can cause problems with less flexible PDF parsers, such as old printing industry hardware.

To fix this, we post process the PDF in Ghostscript, taking the opportunity to reprocess the images into a sensible maximum resolution for printing (150 DPI in our case). We end up with a file in PDF/X-3 format, a subset of the PDF spec recommended for printing.

- (NSData *)postProcessPdfData:(NSData *)data {
    NSTask *ghostscriptTask = [[NSTask alloc] init];
    NSPipe *inputPipe = [[NSPipe alloc] init];
    NSPipe *outputPipe = [[NSPipe alloc] init];

    [ghostscriptTask setLaunchPath:@"/usr/local/bin/gs"];
    [ghostscriptTask setCurrentDirectoryPath:[[NSBundle mainBundle] resourcePath]];

    NSArray *arguments = @[
        @"-sDEVICE=pdfwrite",
        @"-dPDFX",
        @"-dSAFER",
        @"-sProcessColorModel=DeviceCMYK",
        @"-dColorConversionStrategy=/LeaveColorUnchanged",
        @"-dPDFSETTINGS=/prepress",
        @"-dDownsampleColorImages=true",
        @"-dDownsampleGrayImages=true",
        @"-dDownsampleMonoImages=true",
        @"-dColorImageResolution=150",
        @"-dGrayImageResolution=150",
        @"-dMonoImageResolution=150",
        @"-dNOPAUSE",
        @"-dQUIET",
        @"-dBATCH",
        @"-P", // look in current dir for the ICC profile referenced in PDFX_def.ps
        @"-sOutputFile=-", // write the result to stdout
        @"PDFX_def.ps",
        @"-" // read the input PDF from stdin
    ];

    [ghostscriptTask setArguments:arguments];
    [ghostscriptTask setStandardInput:inputPipe];
    [ghostscriptTask setStandardOutput:outputPipe];
    [ghostscriptTask launch];

    NSFileHandle *writingHandle = [inputPipe fileHandleForWriting];
    [writingHandle writeData:data];
    [writingHandle closeFile];

    NSFileHandle *readingHandle = [outputPipe fileHandleForReading];
    NSData *outputData = [readingHandle readDataToEndOfFile];
    [readingHandle closeFile];

    // Reap the Ghostscript process once its output has been read
    [ghostscriptTask waitUntilExit];

    return outputData;
}

PDFX_def.ps is a PostScript file used by Ghostscript to make the output PDF/X-3 compatible.
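When checking a PDF by hand, the same Ghostscript step can be run from a shell. This sketch passes the same arguments as the NSTask above, but reads from and writes to named files rather than pipes; run it from the directory holding PDFX_def.ps and its ICC profile. The GS variable (defaulting to gs on your PATH) is only there so the command can be swapped for a stub when testing.

```sh
#!/bin/sh
# Usage: postprocess_pdf input.pdf output.pdf
# -P makes Ghostscript look in the current directory first, so it finds
# PDFX_def.ps and the ICC profile that file references.
postprocess_pdf() {
  "${GS:-gs}" -sDEVICE=pdfwrite -dPDFX -dSAFER \
    -sProcessColorModel=DeviceCMYK \
    -dColorConversionStrategy=/LeaveColorUnchanged \
    -dPDFSETTINGS=/prepress \
    -dDownsampleColorImages=true -dDownsampleGrayImages=true \
    -dDownsampleMonoImages=true \
    -dColorImageResolution=150 -dGrayImageResolution=150 \
    -dMonoImageResolution=150 \
    -dNOPAUSE -dQUIET -dBATCH \
    -P \
    "-sOutputFile=$2" PDFX_def.ps "$1"
}
```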

CMYK Images

Because Quartz maps so closely to the PDF format, it won't do any conversion of your images at render time. If you have RGB images and CMYK text you'll end up with a mixed PDF.

Converting an NSImage from RGB to CMYK is easy though:

NSColorSpace *targetColorSpace = [NSColorSpace genericCMYKColorSpace];
NSBitmapImageRep *targetImageRep;

// Compare colour spaces by value, not by pointer identity
if ([[sourceImageRep colorSpace] isEqual:targetColorSpace]) {
    targetImageRep = sourceImageRep;
} else {
    targetImageRep = [sourceImageRep bitmapImageRepByConvertingToColorSpace:targetColorSpace
                                                            renderingIntent:NSColorRenderingIntentPerceptual];
}

NSData *targetImageData = [targetImageRep representationUsingType:NSJPEGFileType properties:nil];
NSImage *targetImage = [[NSImage alloc] initWithData:targetImageData];

Multi-Core Performance

Normally in Cocoa, all operations that affect the UI should happen on the main thread. However, we have some exceptional circumstances that mean we can parallelise some of the slower bits of our code for performance, if we want to.

Firstly, our NSViews stand alone: they don't appear on screen, they're not part of an NSWindow, and they're not going to be affected by any other part of the operating system. This means we don't need to use the main thread for our UI operations, as nothing else will be touching them.

The method that actually performs the rasterisation of an NSView is thread-safe, assuming the NSGraphicsContext is owned by the thread, but there are often shared objects behind the scenes, such as Core Text controllers. You can either take private copies of these (which seems like an opportunity to introduce some nasty and complex bugs), or you can single-thread the rasterisation process, but multi-thread everything either side of it, such as the loading and conversion of any resources beforehand and the JPEG conversion afterwards.

We use a per-document serial dispatch queue, and put the rasterisation through that, which still gives us multi-core image conversion (the slowest portion of the code).

- (NSArray *)jpegsPreviewsForWidth:(float)width quality:(float)quality {
    NSUInteger pageCount = document.pages.count;
    NSMutableArray *pagePreviewsArray = [NSMutableArray arrayWithCapacity:pageCount];

    // Fill the array with placeholders, so we can replace them by index later.
    // This is necessary: arrayWithCapacity: doesn't add any objects, and
    // replaceObjectAtIndex:withObject: raises for an index beyond the count.
    for (NSUInteger i = 0; i < pageCount; i++) {
        [pagePreviewsArray addObject:[NSNull null]];
    }

    dispatch_queue_t queue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0);
    dispatch_queue_t serialQueue;
    serialQueue = dispatch_queue_create("com.newspaperclub.PreviewsSynchronizationQueue", NULL);

    // Process pages concurrently; dispatch_apply returns once every page is done
    dispatch_apply(pageCount, queue, ^(size_t pageIndex) {
        NSData *jpegData = [self jpegForPageIndex:pageIndex width:width quality:quality];

        // Synchronize access to pagePreviewsArray through a serial dispatch queue
        dispatch_sync(serialQueue, ^{
            [pagePreviewsArray replaceObjectAtIndex:pageIndex withObject:jpegData];
        });
    });

    return pagePreviewsArray;
}

- (NSData *)jpegForPageIndex:(NSInteger)pageIndex width:(float)width quality:(float)quality {
    // Perform rasterization on the per-document serial render queue
    __block NSImage *image;
    dispatch_sync(renderDispatchQueue, ^{
        image = [self imageForPageIndex:pageIndex width:width];
    });

    // JPEG conversion runs on the calling thread, so it happens in parallel
    NSBitmapImageRep *imageRep = [[image representations] objectAtIndex:0];
    NSNumber *qualityNumber = [NSNumber numberWithFloat:quality];
    NSDictionary *properties = @{ NSImageCompressionFactor: qualityNumber };
    NSData *bitmapData = [imageRep representationUsingType:NSJPEGFileType properties:properties];

    return bitmapData;
}

The End

Plumping for Quartz + Cocoa to do something like invoice generation is likely to be overkill - you're probably better off with a higher level PDF library. But if you need to have very fine control over a document, to have quality typography, and to render it with near real-time performance, it's a great bet and we're very happy with it.

Roll Two

OPERATION: SHARDFOX

The observation deck in the Shard is opening tomorrow, so you'll see lots of photos from the top, if you haven't already.

I was lucky enough to have an opportunity to go round while the building was still being finished, with Ben, Chris and Russell, on a tour with one of the friendly civil engineers.

It's been a while (September 2011), so here are the bits I remember, and probably some facts I've exaggerated in the pub since then.

We went through fingerprint security and took the lift up to the 50-something floor, where all our phones promptly lost reception. Too much signal? Too little? Regardless, I laughed at the thought of buying a $25m duplex apartment and not getting any texts. Sadly, I'm sure they've fixed it by now.

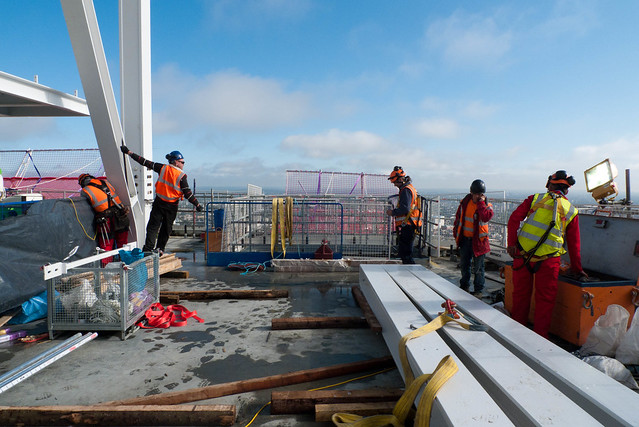

Here, some brave fellas were fitting the glass windows to the outside of the structure. It's windy up there. I could barely watch, let alone take this photo.

It's a cliché, but London really does look like a map beneath you, more compressed than you expect.

Here's a photo from the ground:

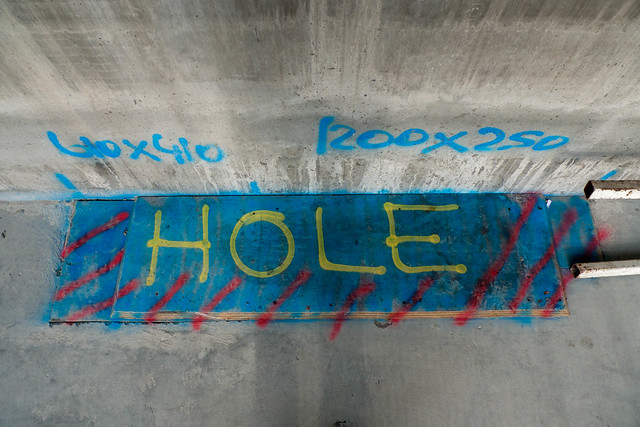

Do you see that little platform jutting out near the top on the left side of the building? It's a loading platform, used to land materials from the cranes. It's cantilevered against the floor and ceiling of that level, so it can be removed later. I think it's on the 62nd floor.

That's this:

And the view below:

Now, I'm pretty comfortable with heights, but at that point my lizard brain kicked in. I couldn't stay on the platform for long.

The lift hadn't been fitted all the way to the top then, so we clambered around the husk of steel girders and concrete floors, through puddles and piping, all the way to the 72nd floor, which is now the top floor of the observation deck.

That's Chris and Russell climbing from floor 71 to 72, on the north east corner of the building.

The building is supported on an internal concrete column. Concrete is poured into a frame, and as it sets, the frame pulls itself up on the concrete that has just set. The concrete column stops at the 72nd floor: the building narrows so much by then that there's not a lot of usable floor space left, so the next 10 floors are hollow, there to give the building its shardiness.

Our guide said that on a clear day you can see from the south coast to Norwich cathedral. It's a good pub fact, but seems a bit far to me. Still, I use it from time to time. Seems silly not to.

And finally, the view from the top:

It was a brilliant day out. More photos on Flickr.

Roll One

I shot my first roll of 35mm film in a very long time over the weekend. It was lots of fun.

While I had a film camera in my teens, by the time I was getting interested in photography again in my early twenties, good digital cameras were starting to surface, so I stupidly blew my first month's pay packet from my first proper job on a Nikon D70.

This time, I picked up a Nikon FE, second hand from a nice German man on eBay. It's about the same age as me, but it's a lovely piece of machinery.

Film people, like vinyl people, go on and on about all the unique qualities of their medium that make it the One True Way. So I don't mean to sound like that, but the state of 35mm film in 2013 seems pretty good. You can still buy film on the high street (I bought some cheap Agfa stuff from Poundland!), and there seem to be plenty of good places in London that do development and scans for around a fiver. Kodak even released a new film in 2008 (Ektar 100).

It's nice to know it's all still there, but also a reminder of how quickly it all changed. It doesn't seem that long ago my Mum was posting stuff off to Bonusprint.

I think one of the attractions for me with film, aside from the mechanics, simplicity and aesthetics, was the unpredictability of it. The inability to accurately predict whether that *click* was going to turn out to be a good photograph. The odd things that happen to the photons through twenty layers of glass and chemicals. The details forgotten in the delay between shooting and processing. The serendipity, I guess.

Of all the things we're losing in the transition to digital media and online services, it still feels like this is the one that's most valuable, least recognised, and hardest to design or engineer back in. (Despite some great efforts.)

Another Post About Cycling and Maps and GPS Like All the Other Ones

Over the last few years I've been lucky enough to enjoy some really good bike rides with friends and family, through lots of bits of the UK and Europe that most people don't get to see. The best of those was Land's End to John o' Groats, but I've also done a Coast to Coast (the Way of the Roses), Amsterdam to Berlin, the Canal du Midi, and plenty of day trips out of London.

I've got a nice Dawes Galaxy touring bike which I bought second hand. It's like the Volvo of bikes. Comfy for long distances, decent amount of room for hauling stuff, lots of places to attach things, and a bit on the heavy side.

Since touring is mostly about going places you've never been, without assistance, you need a way of navigating. You can use paper maps, but you'll be stopping every few minutes to check your route, or you'll be mounting a waterproof map case to the handlebars and refolding it every 10 miles.

Or you can use a GPS device. There are expensive bike specific models with cadence meters and competition modes, but those are for carbon fibre roadies, and you just want one with a screen and a simple map.

I've got an old Garmin eTrex Legend HCx, since discontinued. The battery lasts about 24 hours on a decent pair of NiMH AAs. The screen is a bit dim in sunlight, but the backlight works well when night falls. It's durable: I can guarantee that it's more waterproof than me, and I've dropped it numerous times as well as covering it in bike oil, etc.

We've usually got some paper maps for backup, but the GPS is just so useful that unless the batteries fail, or we decide to go off the route, they rarely get used.

It's mounted to the handlebars, with the official Garmin mount. Because it rattles, I have some blu-tack that I shove underneath it to dampen the vibrations. You might fare better with a third-party manufacturer's model.

On the 2GB Micro SD card I've got the whole of UK OpenStreetMap loaded. I download it from Velomap and update it every few months. It's a bit slow at rendering in built up areas, but it's totally usable and way better than buying the expensive Ordnance Survey maps which are basically the same, with more out of date bits. If you can, grab the version with the hill contours – it's useful to see them.

The GPS is great for a few things:

- Showing you a predefined track, and keeping you on it.
- Telling you your mileage, moving average speed, and so on.
- Logging your route for later.
- Working when your smartphone has run out of battery.

It is rubbish at:

- Routing you to a waypoint. It'll often try and take you along footpaths and motorways.
- Discovering what's around you. The 2" screen makes it difficult to see any distance at a usable level of detail, and the Points of Interest database is a complete pain to navigate. For that, use a paper map or your phone, unless you're really stuck.

Lots of well known trips have GPS tracks available for download already – just do a search for "A to B gpx" – but often you'll want to draw out a new route.

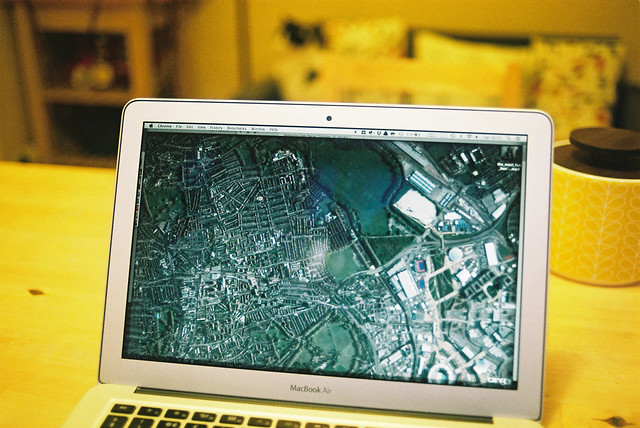

For this, I usually use Google Maps. I often plot a driving route from A to B, and then override it by dragging the route around to take it off the main roads and along quiet country lanes. It always snaps to the road network, so however you drag it you get a usable track. The topography isn't always obvious, so I look at the satellite and terrain imagery on Google Maps, to make sure I'm not routing us up an unnecessary hill or similar.

Then I export and download it as a KML file, and convert it to a GPX file with GPSBabel, before loading it onto the Garmin with Garmin's BaseCamp software.
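The conversion step can also be done with GPSBabel's command-line tool, which is handy for a batch of routes. Here's a sketch with hypothetical file names. Depending on how the KML describes the line, you may end up with a track or a route in the GPX, so it's worth checking the result in BaseCamp. The command is read from GPSBABEL (defaulting to gpsbabel on your PATH) only so it can be stubbed out for testing.

```sh
#!/bin/sh
# Usage: kml_to_gpx route.kml [route.gpx]
# The output name defaults to the input name with .kml swapped for .gpx.
kml_to_gpx() {
  local kml="$1" gpx="${2:-${1%.kml}.gpx}"
  # -i/-f: input format and file; -o/-F: output format and file
  "${GPSBABEL:-gpsbabel}" -i kml -f "$kml" -o gpx -F "$gpx"
}
```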

I'd much rather use an OpenStreetMap based tool for all of this, but I haven't found the right thing. Suggestions greatly appreciated!

Lots of the rides we've done from London have been absolutely brilliant, but took a while to plan, plot and prepare for the GPS. I thought it'd be helpful to put a few of the best ones on their own site so they might appear in search results, saving people some time.

The result is Good Bike Rides from London – a collection of day rides we've done out of London, often to the coast, returning by train. There are just four routes up there at the moment, but I've got quite a few track logs still to plough through and tidy up.

Anyway, they're all good, all recommended, and all ready for sticking on your GPS and hitting the road. Happy cycling.

Update: I completely forgot to mention how useful CycleStreets is for cycle route planning, both long and short distance. I often check my customised route in Google Maps against it, and I love the altitude profiles it displays.

The Full Spectrum White Noise of the Network

I wrote this a while ago, as part of a project I was working on. It ends abruptly, as I switch to talking about stuff relevant to the project at hand.

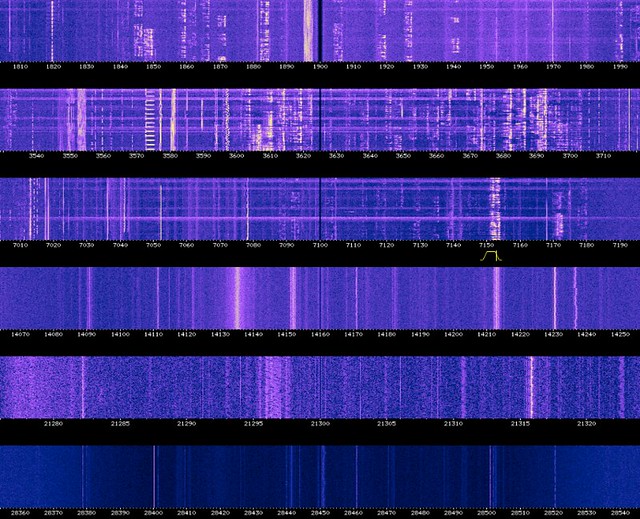

Earlier today I found myself scanning through a High Frequency WebSDR station from the University of Technology Eindhoven (don't ask), and this screenshot reminded me of what I meant by the "full spectrum white noise of the network". The rest of the piece is nonsense, but there's something in there somewhere, and one day I'll try and work out what it is.

The phrase "cloud computing" almost certainly originates from the symbols drawn by engineers on networking diagrams. When representing the rest of the internet - the amorphous blob of computing just beyond the horizon - scribble a cloud and be done with it.

I never drew a cloud: I preferred to draw the earth/ground symbol. You don't connect to the cloud, you ground off to the internet. It seems like a better metaphor: computing flows through physical pipes, popping up in data centres and roadside boxes and telegraph poles. The cloud is a lie.

And I never understood the "ether" in Ethernet. By taking the radiation and constraining it in a waveguide (cable), we've taken it out of the ether. It's the wireless technologies that should be called Ethernet.

I've always wondered what would have happened if we'd developed wireless networking first. If it had just happened to look easier at the time. An internet grown out of Ham Radio enthusiasts, rather than military hard lines. If cabled technology happened to be a recent development, designed to tunnel the radiation through a waveguide to get more distance and less interference.

How would that have changed the infrastructure and the topology of the network? Would the tragedy of the commons have killed it early, as we battled for the same spectrum space amongst our neighbours? Or would we have reached a steady equilibrium, careful to share this precious resource? What social or political environment would have made that possible?

And what technologies could have mediated this? If we'd never licensed the spectrum, would we have evolved devices that could negotiate between themselves, across all frequencies, in the full spectrum white noise of the network? Or would we be limited to a more local internet, rooted in the physical geography? More like a loosely coupled collection of Wide Area Networks. "I'm trying to get a YouTube video from Manchester and it's taking bloody ages."

And would the bandwidth limitations of a shared spectrum have encouraged an internet that's halfway between broadcast and P2P? Something between pirate radio and teletext++.

Could the mobile phone be considered to be an integral, structural, deep point in the network, or something that always feels like an edge node, flitting in and out of existence? Could you pull it out of your pocket and watch the data soar through it, as packets spool in and out, trying to find their way?